With AI-driven content starting to occupy internet real estate, deepfake videos and other AI-generated misinformation campaigns are beginning to surface. These fake videos not only threaten authenticity but also herald a new era of misinformation, one in which many people may struggle to distinguish fabricated content from reality.

Therefore, it is important that we recognise the seriousness of the situation and learn to adapt to this growing threat. Deepfake imagery can also be used to deceive financial institutions and to craft elaborate scams.

In this article, let’s explore various ways that can help you identify deepfakes and AI-generated images to safeguard yourself from this threat.

Cross-check videos/images conveying important information or claims that could impact the masses

If you come across a video of a popular politician discussing a new scheme on a platform like X, it is your responsibility to cross-check the video. Look for the original sources from which the video might have originated, and scrutinize any irregularities in its presentation. As a general rule of thumb, if something seems suspicious or too good to be true, it usually is. Therefore, it’s in your best interest to practice good information hygiene and verify information before sharing or believing it in this day and age.
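One narrow version of this cross-check can be sketched in code. The snippet below is a minimal illustration, not a deepfake detector: it assumes you have downloaded both the clip you received and the file published by the official source, and it only tells you whether the two are byte-for-byte identical. The function names and file paths are hypothetical. A mismatch does not prove a clip is fake, since platforms routinely re-encode, crop, or compress uploads; a match only confirms that you hold an unaltered copy of the official file.

```python
import hashlib


def sha256_of_file(path, chunk_size=1 << 20):
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        # Read fixed-size chunks so large video files do not fill memory.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def is_identical_copy(candidate_path, official_path):
    """Return True only if both files are byte-for-byte identical."""
    return sha256_of_file(candidate_path) == sha256_of_file(official_path)
```

For example, `is_identical_copy("shared_clip.mp4", "official_clip.mp4")` (both file names are placeholders) returns `True` only for an exact copy. If it returns `False`, you still need to compare the content by eye and ear against the official source.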

AI-generated imagery may exhibit rendering inconsistencies and glitches

Despite advances in AI technology, it is still limited by the computing power it requires. Deepfake videos and similar creations often contain irregularities, such as missing video frames, problems with how one face is superimposed onto another, and rendering glitches. Official announcements typically do not appear lifeless or devoid of emotion. So, if something seems unnatural or lacks emotion, make an effort to investigate further and determine the authenticity of the video or image.

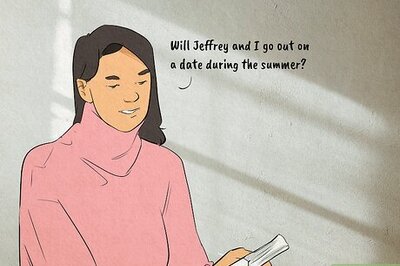

Match the voice to the person or entity it claims to represent

Suppose you come across a video of Jeff Bezos introducing a new Amazon Alexa feature that seems unrealistic. In such cases, it’s advisable to listen for a robotic voice and compare it with verified recordings of the speaker. If the voice sounds robotic or machine-like, it’s possible that the video was generated using AI.

Moreover, there have been numerous instances in which product-driven companies were adversely affected simply because false images of purported products were shared online. Therefore, it is crucial to consume content from social media with a grain of salt—or even better—to consume content from official sources.
